Farewell C1

Yesterday, in a datacenter somewhere in France, there was suddenly an eerie silence as the last remaining racks went quiet for the first time in a long time. As of yesterday, the 1st of September 2021, Scaleway has turned off their C1 ARM servers.

I know because I still had one trusty little C1 server until today, a server I have had since it was brought online 7 years ago. It was never the fastest or the biggest server I’ve had, but it was my little dedicated server. It never complained, never crashed, never rebooted. It just kept running, serving my homepage and some side projects.

History of C1

If you are not familiar with the C1, and why it deserves its own little obituary, then let me give you a bit of backstory.

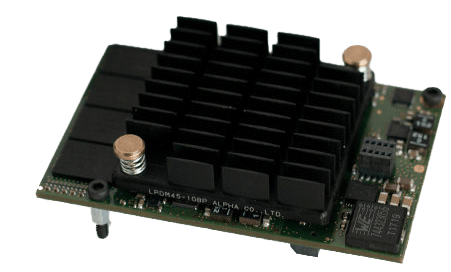

The C1 was introduced around 2015, first as a free 15-minute trial at labs.online.net and then launched as a commercial product under the brand Scaleway (archive.is/scaleway). The C1s were an interesting take on the virtualisation market: instead of cramming as many virtualised hosts as possible onto one powerful machine, they crammed as many tiny SoCs as they could into a rack. They built tiny servers that used custom SoCs backed by shared disk storage. A bit like a cloud-hosted Raspberry Pi, but with a network-attached SSD, on a public and static IP with good connectivity.

What’s the big deal?

There is no big deal. For most people or projects a virtual server will do just fine, or even be a better choice than dedicated hardware. But there are a few reasons I like small dedicated servers. One is that with a dedicated machine, you can be sure that you are always getting the same performance (barring running multiple things on it): if it’s fast now, it will be as fast tomorrow. Virtual servers might be faster in bursts, but they are generally oversubscribed, and if you are unlucky, your performance can vary a lot depending on how busy your neighbours are. The C1 was never really fast, though, which I took as a fun challenge. I knew that if I could get X and Y working well on this limited machine, then it would be extremely fast on a top-of-the-line server if I ever needed to scale up.

It’s more secure

Maybe, in theory at least. When doing threat modelling for a VM, you should always consider the risk of someone else on the host escaping their VM and accessing your VM and files. Back in 2015 there had been a few VM escapes, but the future would bring many more, along with a whole new range of side-channel attacks against shared processors or memory. My little dedicated ARM server never had to worry about Spectre, Meltdown, Rowhammer or any of the other processor bugs which have rattled the whole VPS ecosystem. Being able to just go “oh, that’s interesting” when there is news of a new Spectre-like attack, without having to even consider my little C1 losing performance or needing to be rebooted, was quite nice.

Another benefit was the 4 GiB of RAM. In 2015 that was unheard of for a €2.99 server (and I think it still is today). And that is “dedicated” RAM, which can’t be accessed as easily by the provider, which is important if you care about that sort of thing. Although I bet that, if Scaleway wanted, they could figure out some way to read it out using something like pcileech. [ Update-2021-09-06: I was informed by one of the lead designers that it was designed with attacks like these in mind, so at least any physical attack would not have been straightforward ].

What next?

Life goes on, except for the C1. I do wonder what will happen to them. Maybe they will end up on a flea market somewhere. I am not ready to move my personal things to a VPS just yet, but there are not that many cheap dedicated alternatives out there. The only one (I’ve found) at that price point is Kimsufi, but they are mostly out of stock and lower specced.

In the end, I decided to stay with what I know and picked another of Scaleway’s dedicated offers, an Atom C2350, which has served me well for testing and to which I have now migrated everything.

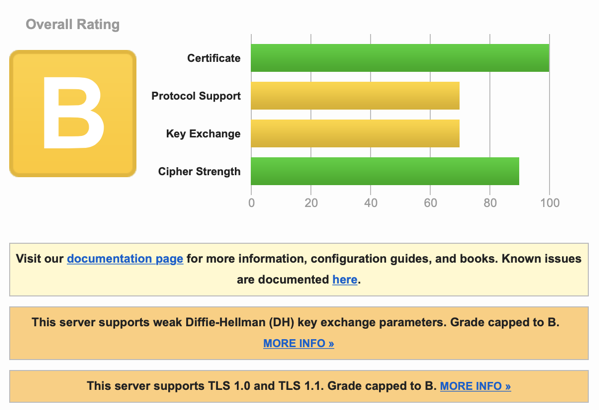
