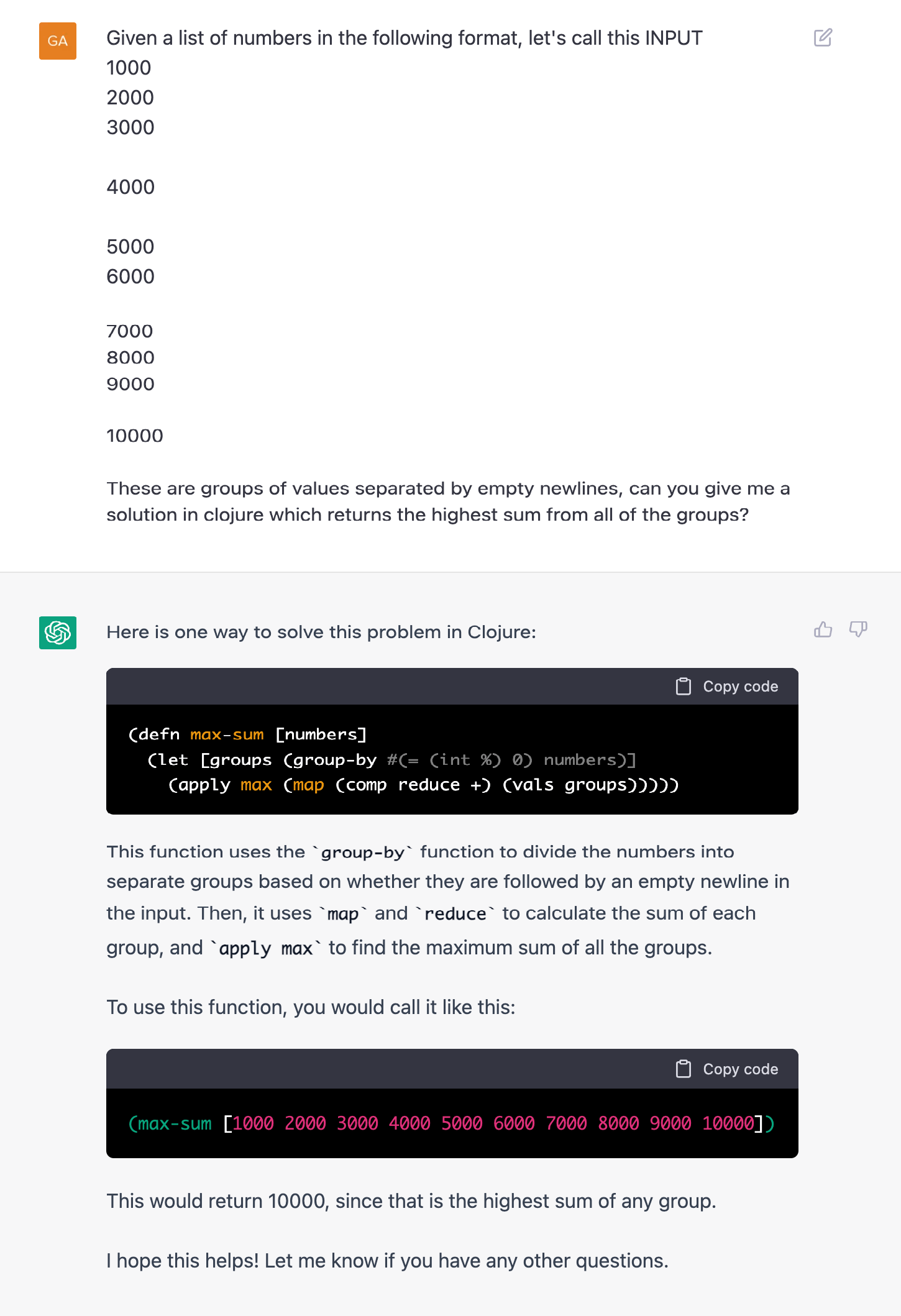

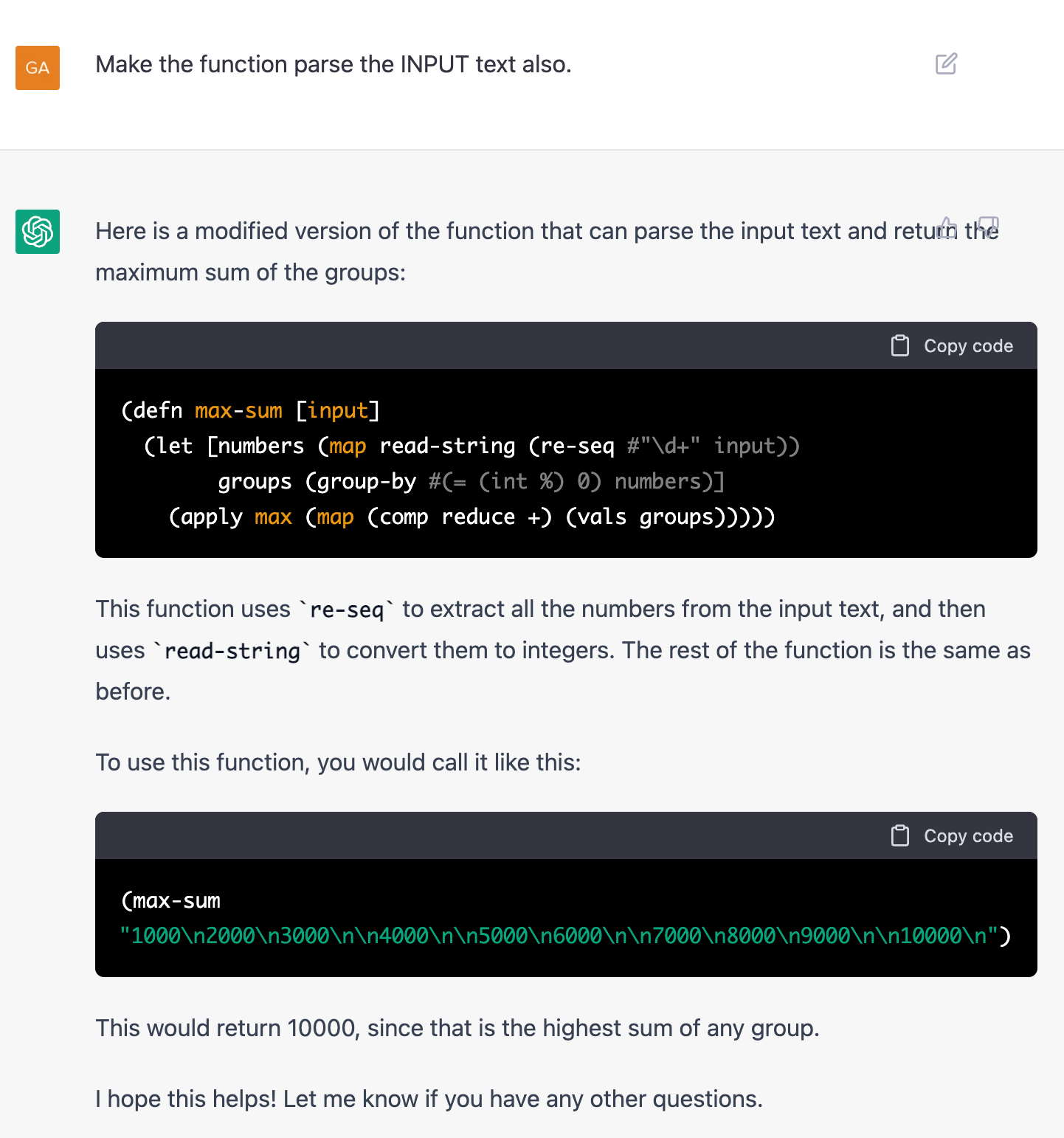

It’s day two of our ChatGPT (CGPT) and Advent of Code series, and we’re excited to see what CGPT can do with today’s challenges. Yesterday, CGPT impressed us with its ability to solve the first Advent of Code puzzle, and today we’re moving on to the second. Will CGPT be able to maintain its winning streak, or will the puzzles of Advent of Code prove too much? Let’s find out.

Ok, this looks like a more complex one. First, we need to simplify the original instructions.

These are the instructions I gave it:

There is a rock paper and scissor tournament. You have been given a guide to follow. The guide works as follows.

The first column is what your opponent is going to play: A for Rock, B for Paper, and C for Scissors. The second column is what should play in response: X for Rock, Y for Paper, and Z for Scissors.

The winner of the whole tournament is the player with the highest score. Your total score is the sum of your scores for each round. The score for a single round is the score for the shape you selected (1 for Rock, 2 for Paper, and 3 for Scissors) plus the score for the outcome of the round (0 if you lost, 3 if the round was a draw, and 6 if you won).

Here is a sample guide.

    A Y
    B X
    C Z

This strategy guide predicts and recommends the following:

In the first round, your opponent will choose Rock (A), and you should choose Paper (Y). This ends in a win for you with a score of 8 (2 because you chose Paper + 6 because you won).

In the second round, your opponent will choose Paper (B), and you should choose Rock (X). This ends in a loss for you with a score of 1 (1 + 0).

The third round is a draw with both players choosing Scissors, giving you a score of 3 + 3 = 6.

In this example, if you were to follow the strategy guide, you would get a total score of 15 (8 + 1 + 6).

Provide a solution using any programming language to calculate the total score of a strategy guide in the above format.

But after a few tries, it was clear that this prompt wasn’t good enough. Here are a few observations from the Python samples it produced (it also tried to give me a JavaScript one, but I told it to use only real programming languages ;-).

One thing I thought was notable in all these samples is the bias they show. Usually in rock, paper, scissors you get a draw when both players pick the same shape, and it is hard to make CGPT stop assuming this. It always wants to add:

    elif opponent_shape == player_shape:
        outcome = 'draw'
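To make the problem concrete, here is a minimal sketch (not CGPT’s output) of what happens if that comparison is made on the raw symbols from the guide. The opponent’s column uses A/B/C and ours uses X/Y/Z, so the two are never equal until both are decoded to the same shape names. The names `SAMPLE_GUIDE`, `TO_SHAPE`, `raw_draws` and `decoded_draws` are hypothetical.

```python
# Illustrative sketch; all names here are hypothetical.
SAMPLE_GUIDE = [("A", "Y"), ("B", "X"), ("C", "Z")]

# Decode both encodings onto the same shape names before comparing.
TO_SHAPE = {
    "A": "rock", "B": "paper", "C": "scissors",
    "X": "rock", "Y": "paper", "Z": "scissors",
}


def raw_draws(guide):
    """Count draws by comparing the raw symbols, as the generated code did."""
    return sum(1 for opponent, player in guide if opponent == player)


def decoded_draws(guide):
    """Count draws after decoding both columns to shape names."""
    return sum(
        1 for opponent, player in guide
        if TO_SHAPE[opponent] == TO_SHAPE[player]
    )


print(raw_draws(SAMPLE_GUIDE))      # 0: 'C' never equals 'Z'
print(decoded_draws(SAMPLE_GUIDE))  # 1: the third round is Scissors vs Scissors
```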

Another thing, which seems easily fixed by future generations (by giving it the ability to run the code before it provides it back) is how sure it is that it’s correct.

It will produce some code and end it with this:

    print(total_score) # Expected output: 15

and when you run it, you get back 18.
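One way to guard against this is to turn the claimed result into a check that actually runs. The following is a minimal sketch, not something CGPT produced: it scores the sample guide with a lookup table built from the rules in the prompt and asserts the total instead of stating it in a comment. The names `ROUND_SCORE` and `score_guide` are hypothetical.

```python
# Illustrative sketch; ROUND_SCORE and score_guide are hypothetical names.
# Each entry is shape score (1/2/3) plus outcome score (0/3/6) for that round.
ROUND_SCORE = {
    "A X": 4, "A Y": 8, "A Z": 3,
    "B X": 1, "B Y": 5, "B Z": 9,
    "C X": 7, "C Y": 2, "C Z": 6,
}


def score_guide(text: str) -> int:
    """Sum the score of every non-empty line in the guide."""
    return sum(
        ROUND_SCORE[" ".join(line.split())]
        for line in text.splitlines()
        if line.strip()
    )


sample = "A Y\nB X\nC Z"
total_score = score_guide(sample)

# The assertion fails loudly if the code and the expectation disagree.
assert total_score == 15, f"expected 15, got {total_score}"
print(total_score)
```

With an assertion in place, a wrong answer such as 18 stops the script instead of sitting next to a comment that says 15.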

Back on track

So let’s try to simplify things instead.

First, why is the choice coded differently for me and the opponent? That confuses CGPT, so let’s first ask it for code to recode my choices to use the same symbols.

So, I tell it:

with the following input

    A Y
    B X
    C Z

provide a python function which reads the input from a file and replaces

Y with B

X with A

and

Z with C

And CGPT delivers

    def switch_choices_from_file(filename: str) -> str:
        # Open the file and read the strategy guide
        with open(filename) as f:
            guide = f.read()

        # Split the input into lines
        lines = guide.strip().split('\n')

        # Create a new list of lines with switched choices
        switched_lines = []
        for line in lines:
            # Split the line into opponent and player choices
            opponent, player = line.split()

            # Switch the opponent and player choices
            if player == 'Y':
                switched_lines.append(f'{opponent} B')
            elif player == 'X':
                switched_lines.append(f'{opponent} A')
            else:
                switched_lines.append(f'{opponent} C')

        # Join the list of lines into a single string
        return '\n'.join(switched_lines)

This works fine. Now we can simplify the ask from CGPT.

There is a rock paper and scissor tournament. You have been given a guide to follow. The guide works as follows.

The first column is what your opponent is going to play: A for Rock, B for Paper, and C for Scissors. The second column is what should play in response.

The winner of the whole tournament is the player with the highest score. Your total score is the sum of your scores for each round. The score for a single round is the score for the shape you selected (1 for Rock, 2 for Paper, and 3 for Scissors) plus the score for the outcome of the round (0 if you lost, 3 if the round was a draw, and 6 if you won).

Here is a sample guide.

    A B
    B A
    C C

This strategy guide predicts and recommends the following:

In the first round, your opponent will choose Rock (A), and you should choose Paper (B). This ends in a win for you with a score of 8 (2 because you chose Paper + 6 because you won).

In the second round, your opponent will choose Paper (B), and you should choose Rock (A). This ends in a loss for you with a score of 1 (1 + 0).

The third round is a draw with both players choosing Scissors, giving you a score of 3 + 3 = 6.

In this example, if you were to follow the strategy guide, you would get a total score of 15 (8 + 1 + 6).

Provide a solution using python to calculate the total score of the sample input above.

And it provided me with working code:

    # Open the file and read the contents
    with open('guide.txt', 'r') as f:
        guide_data = f.read()

    # Parse the data in the file to create the guide list
    guide = [round.split() for round in guide_data.split('\n') if round]

    # Function to calculate total score
    def calculate_total_score(guide):
        # Initialize total score to 0
        total_score = 0

        # Loop through the rounds in the guide
        for round in guide:
            # Get the shape chosen by the opponent and the player
            opponent_shape = round[0]
            player_shape = round[1]

            # Calculate the score for the chosen shape
            if player_shape == 'A':
                player_score = 1
            elif player_shape == 'B':
                player_score = 2
            elif player_shape == 'C':
                player_score = 3

            # Calculate the score for the outcome of the round
            if opponent_shape == player_shape:
                outcome_score = 3
            elif (opponent_shape == 'A' and player_shape == 'C') or (opponent_shape == 'B' and player_shape == 'A') or (opponent_shape == 'C' and player_shape == 'B'):
                outcome_score = 0
            else:
                outcome_score = 6

            # Add the score for the round to the total score
            total_score += player_score + outcome_score

        # Return the total score
        return total_score

    # Calculate and print the total score
    total_score = calculate_total_score(guide)
    print('Total score:', total_score)

Now we need to combine the two. We get:

    # Sample input
    def switch_choices_from_file(filename: str) -> str:
        # Open the file and read the strategy guide
        with open(filename) as f:
            guide = f.read()

        # Split the input into lines
        lines = guide.strip().split('\n')

        # Create a new list of lines with switched choices
        switched_lines = []
        for line in lines:
            # Split the line into opponent and player choices
            opponent, player = line.split()

            # Switch the opponent and player choices
            if player == 'Y':
                switched_lines.append(f'{opponent} B')
            elif player == 'X':
                switched_lines.append(f'{opponent} A')
            else:
                switched_lines.append(f'{opponent} C')

        # Join the list of lines into a single string
        return '\n'.join(switched_lines)

    guide = [round.split() for round in switch_choices_from_file('/tmp/guide.txt').split('\n') if round]

    # Function to calculate total score
    def calculate_total_score(guide):
        # Initialize total score to 0
        total_score = 0

        # Loop through the rounds in the guide
        for round in guide:
            # Get the shape chosen by the opponent and the player
            opponent_shape = round[0]
            player_shape = round[1]

            # Calculate the score for the chosen shape
            if player_shape == 'A':
                player_score = 1
            elif player_shape == 'B':
                player_score = 2
            elif player_shape == 'C':
                player_score = 3

            # Calculate the score for the outcome of the round
            if opponent_shape == player_shape:
                outcome_score = 3
            elif (opponent_shape == 'A' and player_shape == 'C') or (opponent_shape == 'B' and player_shape == 'A') or (opponent_shape == 'C' and player_shape == 'B'):
                outcome_score = 0
            else:
                outcome_score = 6

            # Add the score for the round to the total score
            total_score += player_score + outcome_score

        # Return the total score
        return total_score

    # Calculate and print the total score
    total_score = calculate_total_score(guide)
    print('Total score:', total_score)

Let’s try…

SUCCESS!

That’s three gold stars for CGPT.
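For comparison, the recoding step and the scoring step can be collapsed into one. This is a minimal sketch, not CGPT’s output. It assumes each letter is turned into an index (A/X = 0, B/Y = 1, C/Z = 2) and relies on the fact that, in the order Rock, Paper, Scissors, each shape beats the one just before it. The names `score_round` and `total_score_part1` are hypothetical.

```python
# Illustrative sketch; score_round and total_score_part1 are hypothetical names.
def score_round(opponent: str, player: str) -> int:
    """Score one round, with opponent in 'ABC' and player in 'XYZ'."""
    o = "ABC".index(opponent)  # 0 = Rock, 1 = Paper, 2 = Scissors
    p = "XYZ".index(player)
    # (p - o) % 3 is 0 for a draw, 1 for a win and 2 for a loss.
    # Shifting by one maps loss/draw/win onto 0/1/2, i.e. 0/3/6 points.
    outcome = (p - o + 1) % 3
    return (p + 1) + 3 * outcome


def total_score_part1(text: str) -> int:
    """Sum the round scores for every non-empty line of the guide."""
    return sum(
        score_round(*line.split())
        for line in text.splitlines()
        if line.strip()
    )


print(total_score_part1("A Y\nB X\nC Z"))  # 15
```

Because both columns are reduced to the same 0/1/2 indices, the different letter encodings no longer matter.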

On to part 2

Since I last worked on part 1 yesterday, I start a new chat and prime it:

Hi, we’re doing advent of code together. I need you to produce code or use any common command line tools which will solve the problems I give you. The problems will always include reading input from a file and producing an answer.

I then give it the full, unedited input from Advent of Code.

It gives me some python code, which doesn’t work. I won’t repeat everything here because I don’t think it’s very interesting. Instead, I’ll just provide a brief summary and highlight the interesting parts.

For example, I tried a few times to just give it back the error, but it failed to figure out the problem until I told it:

no, the problem is that our_choice is used as a key in SHAPE_SCORES, but ourchoice does not indicate our choice rather it indicates if we need to win, loose or draw, can you fix?

And it again provides me with something I immediately notice won’t work, because of this:

    # Get the first character of the opponent's choice as our shape
    our_shape = opponent_choice[0]

But no worries, let’s just tell it

No, you can’t pick the opponent’s choice as our own, we need to pick the right choice to achieve what the guide indicates.

To which it responds

You are correct that we cannot simply choose the same shape as our opponent in order to achieve the outcome indicated by the strategy guide. Instead, we need to choose the shape that will allow us to achieve the desired outcome. [snip]

And it provides new code. This time it runs and produces some output, but the output is wrong. Before I noticed that, however, I had asked it to refactor the code, which it did, but the refactored version doesn’t work.

It takes me a while to notice why, and the reason is interesting. It’s the same thing we noticed in part 1, and it has been visible throughout all the examples, but I hadn’t noticed it until now:

    SHAPE_SCORES = {'R': 1, 'P': 2, 'S': 3}

R, P, S: Rock, Paper, Scissors. That’s what the shapes are usually called. But in this case, the keys are A, B and C. Because of how these models work (I guess; I’m not an expert), it will always veer towards the common case.

Unless told explicitly not to.

you are using the wrong keys , the keys are A, B, C not R, P, S
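As a small illustration of why this matters (a sketch, assuming the refactored code looked the shape up directly from the guide’s letters): a dictionary keyed by R, P and S has no entry for 'A', so the lookup raises a `KeyError`. The name `FIXED_SHAPE_SCORES` is hypothetical.

```python
# The table as CGPT wrote it, keyed by the common names of the shapes.
SHAPE_SCORES = {'R': 1, 'P': 2, 'S': 3}

try:
    SHAPE_SCORES['A']  # the guide uses A, B and C, not R, P and S
except KeyError as err:
    print('lookup failed for key', err)

# Hypothetical corrected table, keyed by the symbols the guide actually uses.
FIXED_SHAPE_SCORES = {'A': 1, 'B': 2, 'C': 3}
print(FIXED_SHAPE_SCORES['A'])
```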

Then, combined with telling it that the last number was too high, it produces a final working solution.

Another gold star for CGPT.
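The final code isn’t reproduced here, so the following is only a sketch of one way part 2 can be solved, not CGPT’s actual answer. It assumes the part 2 rule from the puzzle text, which is not quoted above: in the second column X means we need to lose, Y means draw and Z means win. The tables are keyed by A, B and C on purpose, and the names `pick_shape` and `total_score_part2` are hypothetical.

```python
# Illustrative sketch; all names are hypothetical.
SHAPE_SCORES = {"A": 1, "B": 2, "C": 3}        # Rock, Paper, Scissors
OUTCOME_SCORES = {"X": 0, "Y": 3, "Z": 6}      # assumed: lose, draw, win
WINS_AGAINST = {"A": "B", "B": "C", "C": "A"}  # opponent shape -> shape that beats it
LOSES_TO = {"A": "C", "B": "A", "C": "B"}      # opponent shape -> shape that loses to it


def pick_shape(opponent: str, outcome: str) -> str:
    """Choose our shape so that the round ends with the requested outcome."""
    if outcome == "X":
        return LOSES_TO[opponent]
    if outcome == "Y":
        return opponent
    return WINS_AGAINST[opponent]


def total_score_part2(text: str) -> int:
    """Sum shape score plus outcome score over every non-empty line."""
    total = 0
    for line in text.splitlines():
        if not line.strip():
            continue
        opponent, outcome = line.split()
        our_shape = pick_shape(opponent, outcome)
        total += SHAPE_SCORES[our_shape] + OUTCOME_SCORES[outcome]
    return total


print(total_score_part2("A Y\nB X\nC Z"))  # 12
```

On the sample guide we play Rock in all three rounds (draw, lose, win), which gives 4 + 1 + 7 = 12. To run it against a real guide, read the file into a string first and pass that string to `total_score_part2`.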
