LUKS on NVMe: From 40 GiB/s to 4, Then Back to 20 GiB/s

Note: The testing described in this post was done over a year ago, so things may have changed since then.

At work, we recently upgraded our PostgreSQL servers. This time, however, we hit an unexpected roadblock when enabling full disk encryption (FDE) with LUKS, which is part of our standard deployment. In past benchmarks, enabling LUKS cost us ~10% of our throughput. This time, it left us with only 10% of our throughput - a 90% drop.

Some background: the new server is the most capable server I’ve benchmarked to date, with 12 Intel P5520 NVMe disks and two 48-core Intel Xeon Sapphire Rapids CPUs. That is enough horsepower to handle our workloads for the foreseeable future.

With the 12 disks in a RAID10 configuration, we were happy with the raw performance figures: sequential write speeds of around 20 GiB/s and read speeds of 40 GiB/s (note gigabytes, not gigabits).

But our joy was short-lived. As soon as we added LUKS encryption, performance plummeted. This was not at all what we expected. We had not even planned to do much benchmarking, because previous synthetic benchmarks had shown only a 10% decrease, which in practice meant no real-world impact.

I had always understood modern cryptography to be so fast that encryption adds practically no overhead. Most of that belief probably stems from the push for TLS and http://istlsfastyet.com/, so it might well be wrong here.

Fortunately, we weren’t the first to encounter such a noticeable impact. When researching, we found that Cloudflare had previously grappled with a similar problem and contributed a fix that was eventually merged into the Linux kernel. Enabling this fix reduced CPU usage to near-zero levels. However, surprisingly, it did little to improve the overall I/O performance.

As I had personally told many people that encryption is so fast that there is no reason not to use it, even when the data isn’t sensitive, I felt I had to get to the bottom of this. So we set up a more comprehensive testing framework with various options to see if we could figure out how to improve the speeds. We also added a wildcard: the bcachefs filesystem, which seemed promising and had some interesting features, like using an NVMe/SSD as a read/write cache for spinning disks.

Our test setup consisted of a 10-disk RAID10 array of Intel P5520 NVMe disks, divided into eight equal partitions spread across all ten disks. We did not use all 12 disks because we also planned some single-disk testing, and with the unencrypted file system the RAID10 scaled quite linearly, so we decided the results of the 10-disk tests should apply to a 12-disk RAID10 as well.

These were the options we decided to explore:

- Plain ext4

- Plain LUKS

- LUKS with --sector-size 4096

- LUKS with Cloudflare patches

- LUKS with Cloudflare patches and --sector-size 4096

- Bcachefs

- Bcachefs with encryption

- LUKS with --sector-size 4096 and --perf-same_cpu_crypt

- LUKS with Cloudflare patches, --sector-size 4096, and --perf-same_cpu_crypt
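To make the LUKS variants in the list concrete, here is a minimal Python sketch that only builds (and prints) the cryptsetup command lines; it does not run them. Several things here are assumptions rather than our actual scripts: the device path and mapping name are hypothetical, and the "Cloudflare patches" are assumed to correspond to the `--perf-no_read_workqueue` and `--perf-no_write_workqueue` options that cryptsetup exposes for that kernel feature.

```python
import shlex

# Hypothetical names, for illustration only.
DEVICE = "/dev/md0p3"
NAME = "bench_crypt"


def luks_format_cmd(device, sector_size=None):
    """Build a `cryptsetup luksFormat` command line.

    The encryption sector size is chosen at format time (LUKS2 only).
    """
    cmd = ["cryptsetup", "luksFormat", "--type", "luks2"]
    if sector_size is not None:
        cmd += ["--sector-size", str(sector_size)]
    return cmd + [device]


def luks_open_cmd(device, name, no_workqueues=False, same_cpu_crypt=False):
    """Build a `cryptsetup open` command line with optional performance flags."""
    cmd = ["cryptsetup", "open"]
    if no_workqueues:
        # Assumption: this is what "Cloudflare patches enabled" maps to.
        cmd += ["--perf-no_read_workqueue", "--perf-no_write_workqueue"]
    if same_cpu_crypt:
        cmd.append("--perf-same_cpu_crypt")
    return cmd + [device, name]


# The last variant in the list: all three settings combined.
format_cmd = luks_format_cmd(DEVICE, sector_size=4096)
open_cmd = luks_open_cmd(DEVICE, NAME, no_workqueues=True, same_cpu_crypt=True)

print(shlex.join(format_cmd))
print(shlex.join(open_cmd))
```

Keeping format-time and open-time options in separate functions mirrors how the settings behave: the sector size is baked into the LUKS header, while the `--perf-*` flags can be varied each time the device is opened.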

The server was running the pre-release of Ubuntu 24.04 that was available in March 2024 (to get the newer 6.8 kernel, which supports bcachefs). To test these configurations, we ran four different FIO tests (gist with the .fio) on each of the targets. The tests are designed to measure sequential read and write speeds, as well as random small reads and writes to see how many IOPS we can get out of them. While the test parameters might not be optimal (if you have ideas how to improve them, let me know), we stuck with them because they are the same settings we used many years ago, so they give us an idea of how things have improved.
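For readers who have not used fio, the sketch below renders a job file with the same four kinds of test (sequential read, sequential write, random read, random write). It is not the job file from the gist: the block sizes, queue depths, job counts, runtime, and target path are all placeholder assumptions chosen only to show the shape of such a file.

```python
# Hypothetical target; write tests destroy any data on it.
TARGET = "/dev/mapper/bench_crypt"

# Placeholder settings shared by all jobs (assumed, not the original values).
GLOBAL_OPTIONS = [
    "ioengine=libaio",
    "direct=1",          # bypass the page cache
    "runtime=60",
    "time_based",
    "group_reporting",
]

# One entry per test type: (job name, fio rw mode, block size, iodepth, numjobs).
JOBS = [
    ("seq-read", "read", "1M", 32, 4),
    ("seq-write", "write", "1M", 32, 4),
    ("rand-read", "randread", "4k", 64, 16),
    ("rand-write", "randwrite", "4k", 64, 16),
]


def render_job_file(target):
    """Return the text of a fio job file covering the four test types."""
    lines = ["[global]", f"filename={target}"] + GLOBAL_OPTIONS
    for name, rw, bs, iodepth, numjobs in JOBS:
        lines += [
            "",
            f"[{name}]",
            "stonewall",  # wait for earlier jobs so the tests run one at a time
            f"rw={rw}",
            f"bs={bs}",
            f"iodepth={iodepth}",
            f"numjobs={numjobs}",
        ]
    return "\n".join(lines) + "\n"


job_text = render_job_file(TARGET)
print(job_text)
```

The rendered text can be saved to a file and passed to fio. Large blocks measure throughput, while small random blocks at a high queue depth measure IOPS.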

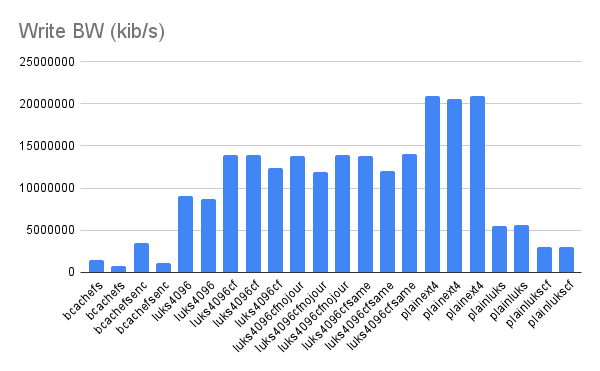

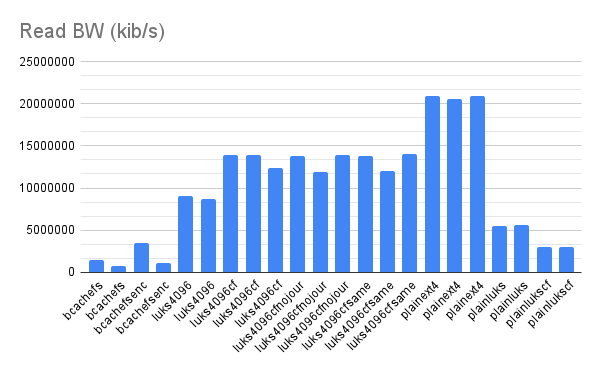

The Results

You can view the full fio results and raw numbers in the Google Sheet here.

Looking at the graph, the key setting was the sector-size. This change brought us up to around 50% of the raw disk speeds – a substantial improvement.

All the other options we tried for LUKS had little to no measurable effect.

While we are not experts on the inner workings of LUKS, it does seem to make sense that a bigger block size would speed things up. Our interpretation is that the bottleneck is not the raw encryption but rather somewhere in the process of getting the blocks lined up for encryption. Therefore, reducing the number of blocks eightfold speeds things up significantly.
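The eightfold figure follows directly from the sector sizes. The small Python calculation below assumes the LUKS default was 512-byte sectors (which is what the eightfold reduction implies) and uses the 20 GiB/s raw write figure from earlier purely as an illustrative rate.

```python
GIB = 1024 ** 3  # bytes in one GiB


def sectors_per_gib(sector_size):
    """Number of encryption sectors needed to cover one GiB of data."""
    return GIB // sector_size


small = sectors_per_gib(512)    # assumed default sector size
large = sectors_per_gib(4096)   # with --sector-size 4096

print(small)           # 2097152
print(large)           # 262144
print(small // large)  # 8

# Sectors per second that would need handling at 20 GiB/s.
rate_gib_per_s = 20
print(rate_gib_per_s * small)  # 41943040
print(rate_gib_per_s * large)  # 5242880
```

If the per-sector handling cost dominates, as our interpretation suggests, going from roughly 42 million to roughly 5 million sectors per second is a plausible reason for the large speed-up.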

The younger bcachefs filesystem did not manage to keep up once encryption was enabled. Our working theory is that this is because it uses ChaCha20/Poly1305 for encryption, which, while a fast algorithm, does not benefit from specialized hardware instructions the way AES does. Intel QAT might help, but we did not have time to look into that.
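One quick way to see which instruction-set extensions a machine advertises is to read the flags line in /proc/cpuinfo. The Python sketch below assumes an x86 Linux system (other architectures label this line differently), and the selection of flags to report is our own. A flag being present does not prove the kernel uses it for a given cipher, so treat this as a starting point for checking the theory, not a confirmation of it.

```python
def cpu_flags(cpuinfo_text):
    """Return the set of CPU flags from the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            return set(line.split(":", 1)[1].split())
    return set()


def read_cpuinfo(path="/proc/cpuinfo"):
    """Read cpuinfo, returning an empty string if it is unavailable."""
    try:
        with open(path) as f:
            return f.read()
    except OSError:
        return ""


# Flags of interest: AES-NI ("aes"), vectorized AES ("vaes"),
# and the SIMD extensions a software cipher could use instead.
INTERESTING = ["aes", "vaes", "avx2", "avx512f"]

flags = cpu_flags(read_cpuinfo())
for name in INTERESTING:
    print(f"{name}: {'yes' if name in flags else 'no'}")
```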

Conclusion

In the end, we settled on using LUKS with --sector-size 4096, the Cloudflare patches enabled, and --perf-same_cpu_crypt. While it does not reach the speeds of the raw, unencrypted disks, this configuration gave us good enough performance to meet our needs while keeping the security benefits of FDE.

We also explored the possibility of leveraging the built-in encryption capabilities of the disks through the Trusted Computing Group’s OPAL specification. Unfortunately, our specific NVMe models lacked support for OPAL or any other form of disk-level encryption.

The key lesson: always benchmark, even if it’s just a quick one. Defaults can leave enormous performance on the table, and the fix may be as simple as a sector-size flag.

Special thanks to Björn D. and Niclas J. for their help with the testing and for their feedback on this post.