Vibe coding is great until it isn't.

Word of warning: This is mostly a rant or reflection. I’m not sure there is anything useful here, so feel free to skip this one :-)

The problem

If you have tried solving complex problems with any of the state-of-the-art models, you have probably noticed how the LLM has a tendency to work fine up to a point and then break down completely. After that, it doesn’t seem to be able to recover.

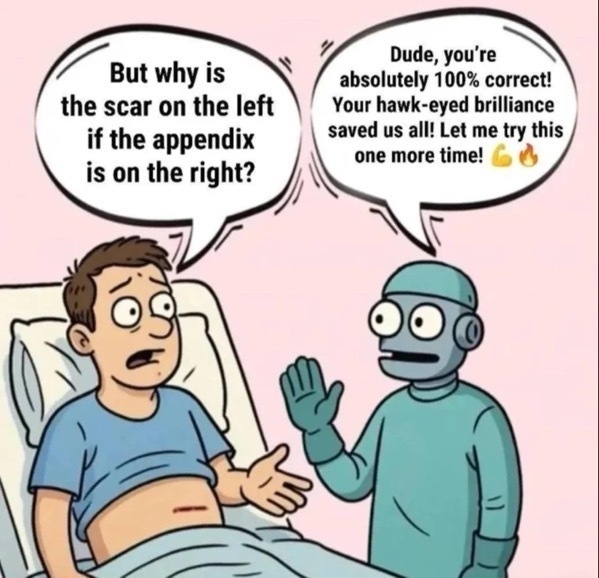

Even if you tell it something is wrong, unless you give it the solution it will just state its typical “You’re absolutely right! I see the issue now” and then usually break things even more. And there is no going back, or at least I have never gotten it back. The only option is to back up far enough in the discussion and fork it, or reset the context if you’re coding.

It makes sense because of how an LLM works, but it’s still limiting and wastes a lot of time because it’s hard to know where it took the wrong turn.

I’ve found this happens much more when dealing with more obscure things. My most recent example is logcheck, which is old but not very popular; Claude Code got stuck multiple times when I was making my logcheck simulator.

This is an interesting effect, and the Illusion of Thinking paper discusses it as well:

Note that this model also achieves near-perfect accuracy when solving the Tower of Hanoi with (N=5), which requires 31 moves, while it fails to solve the River Crossing puzzle when (N=3), which has a solution of 11 moves. This likely suggests that examples of River Crossing with N>2 are scarce on the web, meaning LRMs may not have frequently encountered or memorized such instances during training.

Possible solutions

One solution was mentioned above: reset the context and try to prompt it another way. Unless the problem is actually too complex, it might work.

If the problem is “too complex”, you can try a bigger model if one is available. Otherwise you have to figure it out yourself so you can break it down for the model.

Another option, if the problem is medium-complex, is to just let it spend more cycles on it. To allow that, you probably need to set things up so it can iterate on the problem. For me, when I was having issues getting the regex converted between POSIX and JavaScript, I told it to create a JS test script that it could run with node. That took me out of the loop, and it ran for a few minutes trying to brute-force the problem until it happened to come up with a working solution.
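To give a rough idea of what such a test script can look like, here is a minimal sketch. It is not the script from my session; `posixToJs`, `POSIX_CLASSES`, `CASES` and `runCases` are made-up names, the converter only handles a few POSIX bracket classes, and the sample log lines are invented for illustration. The point is just that the model gets a command it can run itself and a pass/fail signal to iterate against.

```javascript
// Hypothetical converter: rewrites POSIX bracket classes such as [:digit:]
// into ranges that JavaScript's RegExp understands. Only a few classes are
// covered; anything else is reported as an error (an assumption of this sketch).
const POSIX_CLASSES = new Map([
  ["alpha", "a-zA-Z"],
  ["digit", "0-9"],
  ["alnum", "a-zA-Z0-9"],
  ["upper", "A-Z"],
  ["lower", "a-z"],
  ["space", "\\s"],
]);

function posixToJs(pattern) {
  // [[:digit:]] becomes [0-9]: only the inner [:name:] part is replaced.
  return pattern.replace(/\[:(\w+):\]/g, (match, name) => {
    if (!POSIX_CLASSES.has(name)) {
      throw new Error(`Unsupported POSIX class: ${match}`);
    }
    return POSIX_CLASSES.get(name);
  });
}

// Invented test cases: a POSIX pattern, an input line, and whether it should match.
const CASES = [
  { posix: "^[[:alpha:]]+ [[:digit:]]+$", input: "error 42", expected: true },
  { posix: "^[[:alpha:]]+ [[:digit:]]+$", input: "42 error", expected: false },
  { posix: "sshd\\[[[:digit:]]+\\]: Accepted", input: "sshd[1234]: Accepted publickey", expected: true },
  { posix: "^[[:space:]]*$", input: "   ", expected: true },
];

// Runs every case and returns the ones that did not behave as expected.
function runCases(cases) {
  const failures = [];
  for (const { posix, input, expected } of cases) {
    let actual;
    try {
      actual = new RegExp(posixToJs(posix)).test(input);
    } catch (err) {
      actual = `threw: ${err.message}`;
    }
    if (actual !== expected) {
      failures.push({ posix, input, expected, actual });
    }
  }
  return failures;
}

const failures = runCases(CASES);
for (const failure of failures) {
  console.error("FAIL", JSON.stringify(failure));
}
console.log(`${CASES.length - failures.length}/${CASES.length} cases passed`);
// A non-zero exit code gives the model an unambiguous "not done yet" signal.
process.exitCode = failures.length === 0 ? 0 : 1;
```

Saved as, say, `regex-test.js`, it runs with `node regex-test.js`. The model can then edit the converter, rerun, and read the failures without me having to paste anything back into the chat.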

Do you care?

I don’t consider myself an expert on LLM-supported coding, but I’ve played around with it enough to have gotten some experience. And one of the takeaways for me is that I would never use it for anything actually important.

Not because I don’t think it could do it; it probably could in many cases. But I’ve noticed that, for some reason, because the LLM produces so much so fast, I very quickly become disconnected from the solution. Previously, when I was writing code myself (which I’ll admit is quite rare nowadays), I cared about it being correct.

Maybe this is a luxury problem. Looking at the state of software, many or most developers nowadays don’t care.

Which brings me back to the point: if it’s important and you care about it, don’t outsource it to a stochastic parrot.